Tensors: The Building Blocks of Deep Learning

What are tensors?

Tensors are the core data structure used by machine learning systems. A tensor is a container for numerical data: it is how we store the information our system works with.

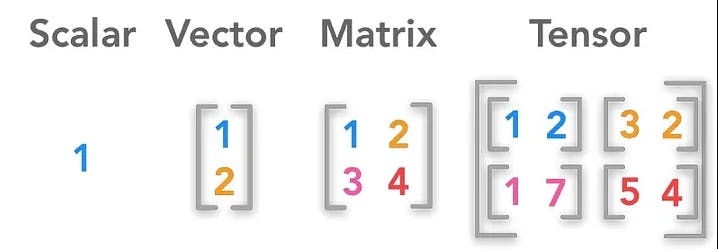

Tensors can have different numbers of dimensions, which determine their rank and shape. A tensor's rank is the number of dimensions (also called axes) it has, and its shape lists the size of each dimension. For example:

A scalar is a tensor of rank 0 that contains a single number, e.g. 5 (shape ()).

A vector is a tensor of rank 1 that contains a sequence of numbers, e.g. [4, 6, 8] (shape (3,)).

A matrix is a tensor of rank 2 that contains a grid of numbers, e.g. [[0, 1, 1], [2, 3, 3], [1, 3, 2]] (shape (3, 3)).

An image is a tensor of rank 3: a stack of matrices, one per channel (e.g. red, green, blue) or feature (e.g. edges). For example, [[[255, 0, 0], [0, 255, 0], [0, 0, 255]], [[128, 128, 128], [64, 64, 64], [32, 32, 32]], [[0, 0, 0], [255, 255, 255], [128, 128, 128]]] has shape (3, 3, 3): three channels, each a 3-by-3 grid of pixel values.
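To make rank and shape concrete, here is a minimal sketch that builds the example tensors above as NumPy arrays. NumPy is an assumption here (the post itself uses TensorFlow later on), but an array's `ndim` attribute is exactly its rank and its `shape` attribute is exactly its shape, and TensorFlow tensors report shapes the same way.

```python
import numpy as np

# The example tensors from the list above, written as NumPy arrays.
scalar = np.array(5)
vector = np.array([4, 6, 8])
matrix = np.array([[0, 1, 1], [2, 3, 3], [1, 3, 2]])
image = np.array([
    [[255, 0, 0], [0, 255, 0], [0, 0, 255]],        # channel 0
    [[128, 128, 128], [64, 64, 64], [32, 32, 32]],  # channel 1
    [[0, 0, 0], [255, 255, 255], [128, 128, 128]],  # channel 2
])

for name, t in [("scalar", scalar), ("vector", vector),
                ("matrix", matrix), ("image", image)]:
    # ndim is the rank; shape is the size along each dimension.
    print(f"{name}: rank={t.ndim}, shape={t.shape}")
```

This prints ranks 0, 1, 2, and 3 with shapes (), (3,), (3, 3), and (3, 3, 3). Note that the rank always equals the length of the shape.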

Tensors can have higher ranks as well, depending on the complexity of the data. For example:

A video is a tensor of rank 4 that contains a sequence of images, one per frame (e.g. [[[[255, ...], ...], ...], [[[128, ...], ...], ...], ...]), for example with shape (frames, height, width, channels).

Text is a tensor of rank 2 or higher once its words or characters are encoded as numbers. A batch of encoded sequences such as [[72, 101, ...], [87, ...], ...] has rank 2, and the rank grows further when each token is mapped to a vector of features.
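Here is a minimal sketch, in NumPy, of how such tensors might be built. The video dimensions and the words are hypothetical, and encoding characters with Python's built-in `ord()` is a simplification (real text models usually map tokens to IDs from a vocabulary). Sequences of different lengths are padded with 0 so they can be stacked into one rank-2 tensor; with "Hello" and "World" this reproduces the [72, 101, ...] and [87, ...] rows from the example above.

```python
import numpy as np

# A hypothetical video clip: 10 frames of 4x4 RGB images.
# Shape convention assumed here: (frames, height, width, channels).
video = np.zeros((10, 4, 4, 3), dtype=np.uint8)
print("video rank:", video.ndim, "shape:", video.shape)

# Encode each word as a list of character codes (Unicode code points).
words = ["Hello", "World", "Hi"]
encoded = [[ord(c) for c in w] for w in words]

# Pad every sequence with 0 to the same length so they stack into a rank-2 tensor.
max_len = max(len(seq) for seq in encoded)
text = np.array([seq + [0] * (max_len - len(seq)) for seq in encoded])
print("text rank:", text.ndim, "shape:", text.shape)
print(text)
```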

Why are tensors important for deep learning?

Tensors are important for deep learning because operations on them can be executed efficiently on a CPU or GPU. Training and running a neural network involves a huge number of matrix multiplications, and these multiplications are highly parallelizable. That is why tensors (and n-dimensional arrays in general) are usually stored and processed on GPUs to speed up training and inference.
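To see why matrix multiplication suits parallel hardware, consider what it computes. The sketch below uses NumPy on the CPU (an assumption; no particular library is implied by the text above) and compares a naive loop with a single vectorized `@` call. The matrix sizes are arbitrary. Each output entry is an independent dot product of one row and one column, which is exactly the kind of work a GPU can spread across many cores at once.

```python
import numpy as np

rng = np.random.default_rng(0)
a = rng.standard_normal((64, 32))
b = rng.standard_normal((32, 16))

def matmul_loops(a, b):
    """Naive matrix multiply: each output entry is an independent dot product."""
    n, k = a.shape
    k2, m = b.shape
    assert k == k2, "inner dimensions must match"
    out = np.zeros((n, m))
    for i in range(n):
        for j in range(m):
            # out[i, j] depends only on row i of a and column j of b,
            # so all n * m entries could be computed in parallel.
            out[i, j] = sum(a[i, p] * b[p, j] for p in range(k))
    return out

fast = a @ b               # one vectorized call into optimized compiled code
slow = matmul_loops(a, b)  # same result, one entry at a time in Python
print(np.allclose(fast, slow))                  # True
print("independent dot products:", fast.size)   # 1024
```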

Tensors are also the basic unit of computation in deep learning frameworks such as TensorFlow and PyTorch. These frameworks let us define and run operations on tensors efficiently and flexibly, either step by step or compiled into graphs of operations. For example:

We can use tensors to store the input data (e.g. images), the weights and biases of the neural network layers, and the output predictions (e.g. probabilities).

We can use tensors to perform operations such as convolutions, activation functions, and loss functions on the neural network layers, and to compute gradients through backpropagation.

We can use tensors to update the weights and biases of the neural network layers using optimization algorithms such as gradient descent.
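The three uses above fit together in a training loop. Below is a framework-free sketch in NumPy that fits a single dense layer to hypothetical data generated from a known linear rule (`true_w` and the bias 0.3 are made up for illustration). The gradients of the mean squared error loss are derived by hand here; this is an assumption made to keep the example self-contained, since TensorFlow and PyTorch compute these gradients automatically through backpropagation. Every quantity involved (inputs, weights, bias, predictions, gradients) is a tensor.

```python
import numpy as np

rng = np.random.default_rng(42)

# Input data: a batch of 8 examples with 3 features each, shape (8, 3).
x = rng.standard_normal((8, 3))
# Hypothetical targets from a known linear rule, shape (8, 1).
true_w = np.array([[2.0], [-1.0], [0.5]])
y = x @ true_w + 0.3

# Weights and bias of a single dense layer, also tensors.
w = np.zeros((3, 1))
b = np.zeros((1,))
learning_rate = 0.1

def mse(pred, target):
    """Mean squared error loss."""
    return np.mean((pred - target) ** 2)

initial_loss = mse(x @ w + b, y)
for _ in range(200):
    pred = x @ w + b                          # forward pass: predictions, shape (8, 1)
    # Backward pass: hand-derived gradients of the MSE loss.
    grad_pred = 2.0 * (pred - y) / pred.size  # dL/dpred, shape (8, 1)
    grad_w = x.T @ grad_pred                  # dL/dw, shape (3, 1)
    grad_b = grad_pred.sum(axis=0)            # dL/db, shape (1,)
    # Gradient descent: step each parameter against its gradient.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

final_loss = mse(x @ w + b, y)
print(f"loss: {initial_loss:.4f} -> {final_loss:.6f}")
print("learned w:", w.ravel().round(2), "b:", b.round(2))
```

Because the targets come from an exact linear rule, the learned `w` and `b` should approach `true_w` and 0.3 as training continues.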

How can we use tensors in deep learning?

To use tensors in deep learning, we need to learn how to create them, manipulate them, and perform operations on them using a deep learning framework such as TensorFlow or PyTorch.

Here are some examples of how to use tensors in Python using TensorFlow:

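```python
# Import TensorFlow
import tensorflow as tf

# Create a scalar tensor (rank 0)
tensor_0D = tf.constant(5)
print("Scalar tensor:", tensor_0D)

# Create a vector tensor (rank 1), shape (3,)
tensor_1D = tf.constant([4, 6, 8])
print("Vector tensor:", tensor_1D)

# Create a matrix tensor (rank 2), shape (3, 3)
tensor_2D = tf.constant([[0, 1, 1], [2, 3, 3], [1, 3, 2]])
print("Matrix tensor:", tensor_2D)

# Create an image tensor (rank 3): 3 channels, each a 3x3 matrix
tensor_3D = tf.constant([
    [[255, 0, 0], [0, 255, 0], [0, 0, 255]],
    [[128, 128, 128], [64, 64, 64], [32, 32, 32]],
    [[0, 0, 0], [255, 255, 255], [128, 128, 128]],
])
print("Image tensor:", tensor_3D)

# Perform operations on tensors
# Element-wise addition: the (3,) vector is broadcast across each row of the (3, 3) matrix
tensor_sum = tf.add(tensor_1D, tensor_2D)
print("Sum of tensors:", tensor_sum)

# Matrix multiplication: inner dimensions must match, (3, 3) x (3, 3) -> (3, 3)
tensor_prod = tf.matmul(tensor_2D, tensor_2D)
print("Product of tensors:", tensor_prod)

# Transpose swaps rows and columns
tensor_trans = tf.transpose(tensor_2D)
print("Transpose of tensor:", tensor_trans)

# Access elements of tensors
tensor_elem = tensor_2D[1, 0]   # row 1, column 0 -> 2
print("Element of tensor:", tensor_elem)

tensor_slice = tensor_2D[:, 1]  # every row, column 1 -> [1, 3, 3]
print("Slice of tensor:", tensor_slice)

# Reshape tensors: the total number of elements (9) must stay the same
tensor_reshaped = tf.reshape(tensor_2D, (1, 9))
print("Reshaped tensor:", tensor_reshaped)
```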
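The `tf.add` call above adds a vector to a matrix, which only works when their shapes are compatible under broadcasting. TensorFlow's element-wise operations follow the same broadcasting rules as NumPy, so the sketch below uses NumPy (an assumption, chosen to keep it small) to show the rule: shapes are compared from the last dimension backwards, and each pair of sizes must be equal or one of them must be 1, with missing leading dimensions treated as 1.

```python
import numpy as np

vector = np.array([4, 6, 8])                           # shape (3,)
matrix = np.array([[0, 1, 1], [2, 3, 3], [1, 3, 2]])   # shape (3, 3)

# (3,) vs (3, 3): trailing sizes 3 == 3, so the vector is added to every row.
total = vector + matrix
explicit = np.stack([row + vector for row in matrix])
print(np.array_equal(total, explicit))  # True
print(total)

# (3,) vs (3, 2): trailing sizes 3 != 2 and neither is 1, so this fails.
try:
    vector + np.zeros((3, 2))
except ValueError as err:
    print("incompatible shapes:", err)
```

The same check explains shape errors in TensorFlow: if the trailing dimensions of two operands disagree and neither is 1, the element-wise operation cannot broadcast them.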

Conclusion

Tensors are the data structure machine learning systems use to store and process numerical data. Their rank and shape depend on the complexity of the data, from a single number to stacks of images and videos. Tensors are important for deep learning because operations on them, above all large matrix multiplications, can be executed efficiently and in parallel on a CPU or GPU. They are also the basic unit of computation in deep learning frameworks such as TensorFlow and PyTorch, which let us define and run operations on tensors efficiently and flexibly.

I hope this blog post helped you understand what tensors are and how they can be used in deep learning. If you have any questions or feedback, please let me know in the comments below.

Thanks :)