A Deep Dive into Recurrent Neural Networks

Recurrent Neural Networks (RNNs) play a critical role in deep learning and artificial intelligence because they are built to process sequential data: text, audio, time series, and other inputs where order matters. This blog post explores RNNs in detail, covering their structure, how they work, their applications, and their main variants.

1. Understanding Recurrent Neural Networks:

1.1 What is a Recurrent Neural Network?

A Recurrent Neural Network is a type of artificial neural network designed specifically for sequential data. In contrast to traditional feedforward neural networks, where information flows in one direction without any loops, RNNs maintain a hidden state that carries information from previous time steps into the current one.

1.2 The Basic Structure of RNNs

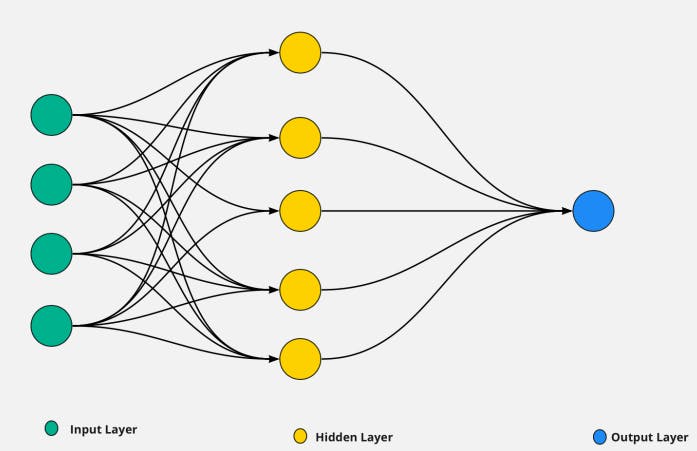

Recurrent Neural Networks consist of three primary components: input nodes, hidden nodes (known as the recurrent hidden state), and output nodes.

The diagram below illustrates the basic structure of a simple RNN:
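To make the three components concrete, here is a minimal Python sketch that lists the shape of each weight matrix in a single-layer "vanilla" RNN and counts its parameters. The input, hidden, and output sizes are made-up examples (not read off the diagram), and the one-bias-per-layer layout is an assumption; some libraries use two bias vectors for the hidden layer.

```python
# Sketch: weight shapes of a single-layer vanilla (Elman-style) RNN.
# The sizes passed in below are illustrative assumptions.

def rnn_param_shapes(input_size: int, hidden_size: int, output_size: int) -> dict:
    """Return the (rows, cols) shape of every trainable tensor."""
    return {
        "W_xh": (hidden_size, input_size),   # input  -> hidden
        "W_hh": (hidden_size, hidden_size),  # hidden -> hidden (the recurrent loop)
        "b_h": (hidden_size, 1),             # hidden bias
        "W_hy": (output_size, hidden_size),  # hidden -> output
        "b_y": (output_size, 1),             # output bias
    }


def rnn_param_count(input_size: int, hidden_size: int, output_size: int) -> int:
    """Total number of trainable parameters."""
    shapes = rnn_param_shapes(input_size, hidden_size, output_size)
    return sum(rows * cols for rows, cols in shapes.values())


# 20*10 + 20*20 + 20 + 5*20 + 5
print(rnn_param_count(input_size=10, hidden_size=20, output_size=5))  # 725
```

The recurrent matrix W_hh is the piece a feedforward network lacks. Because all five tensors are shared across time steps, a 3-step and a 300-step sequence use exactly the same 725 parameters in this example.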

2. Working Mechanism of an RNN:

2.1 Forward Propagation

During forward propagation, the input sequence is fed into the RNN one element per time step. At each step, the hidden state is updated from both the current input and the previous hidden state, and the updated hidden state is then used to produce the output for that time step.
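For a simple (Elman-style) RNN, one common way to write the forward step is h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h) and y_t = W_hy h_t + b_y. The sketch below implements that loop in plain Python. The tanh activation, the linear output layer, the zero initial state, and the random toy weights are all assumptions chosen for illustration.

```python
import math
import random


def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def add(*vectors):
    """Element-wise sum of equal-length vectors."""
    return [sum(vals) for vals in zip(*vectors)]


def rnn_forward(xs, W_xh, W_hh, b_h, W_hy, b_y, h0=None):
    """Run a simple RNN over xs (a list of input vectors).

    Returns the hidden state and the output for every time step.
    """
    h = h0 if h0 is not None else [0.0] * len(b_h)  # initial hidden state
    hs, ys = [], []
    for x in xs:
        # New hidden state depends on the current input AND the previous state.
        h = [math.tanh(a) for a in add(matvec(W_xh, x), matvec(W_hh, h), b_h)]
        # Output for this time step is read off the hidden state.
        y = add(matvec(W_hy, h), b_y)
        hs.append(h)
        ys.append(y)
    return hs, ys


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
input_size, hidden_size, output_size = 3, 4, 2
W_xh = rand_matrix(hidden_size, input_size, rng)
W_hh = rand_matrix(hidden_size, hidden_size, rng)
W_hy = rand_matrix(output_size, hidden_size, rng)
b_h, b_y = [0.0] * hidden_size, [0.0] * output_size

# A toy sequence of three one-hot inputs.
sequence = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
hs, ys = rnn_forward(sequence, W_xh, W_hh, b_h, W_hy, b_y)
print(len(ys), [round(v, 3) for v in ys[-1]])
```

Note that the loop body uses the same W_xh, W_hh, and W_hy at every step; only the hidden state h changes as the sequence is consumed.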

2.2 Training an RNN

Training an RNN means adjusting its weights with an optimization algorithm (e.g., gradient descent) to minimize a loss function (e.g., mean squared error or cross-entropy). The gradients are computed with backpropagation through time (BPTT): the network is unrolled across the time steps of the sequence, ordinary backpropagation is applied to the unrolled graph, and because the same weights are used at every step, their gradient contributions are summed over all time steps.
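A small way to see BPTT in action is a scalar RNN (one input, one hidden unit, one output) with a squared-error loss summed over time steps. The sketch below uses made-up toy data and hypothetical helper names: it runs the forward pass, walks backward through the time steps accumulating gradients for the shared weights, checks them against finite differences, and takes one plain gradient-descent step.

```python
import math


def forward(params, xs):
    """Scalar RNN: h_t = tanh(w_x*x_t + w_h*h_{t-1}), y_t = w_y*h_t."""
    w_x, w_h, w_y = params
    hs = [0.0]  # hs[0] is the initial state h_0
    ys = []
    for x in xs:
        hs.append(math.tanh(w_x * x + w_h * hs[-1]))
        ys.append(w_y * hs[-1])
    return hs, ys


def loss(params, xs, targets):
    """Squared error summed over all time steps."""
    _, ys = forward(params, xs)
    return 0.5 * sum((y - t) ** 2 for y, t in zip(ys, targets))


def bptt(params, xs, targets):
    """Gradients of the loss w.r.t. (w_x, w_h, w_y) via backpropagation through time."""
    w_x, w_h, w_y = params
    hs, ys = forward(params, xs)
    g_x = g_h = g_y = 0.0
    dh_next = 0.0  # gradient reaching h_t from later time steps
    for t in reversed(range(len(xs))):
        h, h_prev = hs[t + 1], hs[t]
        dy = ys[t] - targets[t]
        g_y += dy * h
        dh = dy * w_y + dh_next  # local error + error flowing back from step t+1
        da = dh * (1.0 - h * h)  # derivative of tanh
        g_x += da * xs[t]        # shared weights: contributions summed over t
        g_h += da * h_prev
        dh_next = da * w_h
    return [g_x, g_h, g_y]


def numerical_grad(params, xs, targets, eps=1e-6):
    """Central finite differences, used only to check bptt()."""
    grads = []
    for i in range(len(params)):
        plus = list(params)
        plus[i] += eps
        minus = list(params)
        minus[i] -= eps
        grads.append((loss(plus, xs, targets) - loss(minus, xs, targets)) / (2 * eps))
    return grads


params = [0.5, 0.8, 1.2]          # w_x, w_h, w_y (arbitrary starting values)
xs = [1.0, -0.5, 0.25, 0.0]       # toy input sequence
targets = [0.3, -0.1, 0.2, 0.1]   # toy targets, one per step

print(bptt(params, xs, targets))
print(numerical_grad(params, xs, targets))  # should closely match the line above

# One gradient-descent step (learning rate chosen arbitrarily).
lr = 0.1
new_params = [p - lr * g for p, g in zip(params, bptt(params, xs, targets))]
print(loss(params, xs, targets), "->", loss(new_params, xs, targets))
```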

3. Applications of Recurrent Neural Networks:

RNNs have been used successfully in a wide range of tasks that involve sequential data, including but not limited to:

Text generation

Language modelling

Machine translation

Speech recognition

Sentiment analysis

Time series prediction

4. RNN Variants and Enhancements:

Over the years, several RNN variants have emerged to address the challenges posed by long-range dependencies and vanishing gradients. Some popular designs include:

4.1 Long Short-Term Memory (LSTM)

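LSTM networks are a type of RNN that capture long-term dependencies better than simple RNNs by using gated memory cells: input, forget, and output gates control what is written to, kept in, and read from a cell state that runs alongside the hidden state.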
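One common formulation of an LSTM step (without peephole connections) computes the input gate i_t, forget gate f_t, and output gate o_t as sigmoids of W x_t + U h_{t-1} + b, a candidate g_t with tanh, and then c_t = f_t * c_{t-1} + i_t * g_t and h_t = o_t * tanh(c_t). Here is a plain-Python sketch of a single step; the parameter layout, helper names, and random initialization are illustrative assumptions, not any library's API.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, U, b, x, h):
    """Compute W @ x + U @ h + b for list-based matrices and vectors."""
    return [
        sum(w * xi for w, xi in zip(W_row, x)) + sum(u * hi for u, hi in zip(U_row, h)) + bi
        for W_row, U_row, bi in zip(W, U, b)
    ]


def lstm_step(x, h_prev, c_prev, p):
    """One LSTM time step. p maps each gate name to its (W, U, b) triple."""
    i = [sigmoid(z) for z in affine(*p["i"], x, h_prev)]    # input gate
    f = [sigmoid(z) for z in affine(*p["f"], x, h_prev)]    # forget gate
    o = [sigmoid(z) for z in affine(*p["o"], x, h_prev)]    # output gate
    g = [math.tanh(z) for z in affine(*p["g"], x, h_prev)]  # candidate values
    # Cell state: keep part of the old memory, write part of the new candidate.
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]
    # Hidden state: a gated, squashed view of the cell state.
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h, c


def init_params(input_size, hidden_size, rng):
    """Small random weights and zero biases for the four gate blocks (hypothetical init)."""
    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]
    return {
        gate: (mat(hidden_size, input_size), mat(hidden_size, hidden_size), [0.0] * hidden_size)
        for gate in ("i", "f", "o", "g")
    }


rng = random.Random(42)
params = init_params(input_size=3, hidden_size=4, rng=rng)
h, c = [0.0] * 4, [0.0] * 4
for x in [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]:
    h, c = lstm_step(x, h, c, params)
print([round(v, 4) for v in h])
```

The additive update of c is the key design choice: when the forget gate is close to 1 and the input gate close to 0, the cell state passes through a step almost unchanged, which is what helps information (and gradients) survive over long spans.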

4.2 Gated Recurrent Units (GRUs)

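GRU networks are a simplified relative of LSTMs. They combine the forget and input gates into a single update gate, add a reset gate, and drop the separate cell state, so they have fewer parameters per unit while often capturing long-term dependencies about as well.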
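A GRU step can be sketched the same way. This follows one common formulation, in which the reset gate r_t scales h_{t-1} before it enters the candidate state and the update gate z_t interpolates between the old state and the candidate. Libraries differ slightly in where the reset gate is applied, so treat the exact equations as an assumption. The second helper counts parameters for a gated layer with one bias per gate block, showing where the "fewer parameters" comes from: three weight blocks instead of the LSTM's four.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def matvec(W, v):
    return [sum(w * vi for w, vi in zip(row, v)) for row in W]


def gru_step(x, h_prev, p):
    """One GRU time step. p maps "r", "z", "n" to (W, U, b) triples."""
    Wr, Ur, br = p["r"]
    Wz, Uz, bz = p["z"]
    Wn, Un, bn = p["n"]
    r = [sigmoid(a + b + c) for a, b, c in zip(matvec(Wr, x), matvec(Ur, h_prev), br)]  # reset gate
    z = [sigmoid(a + b + c) for a, b, c in zip(matvec(Wz, x), matvec(Uz, h_prev), bz)]  # update gate
    # Candidate state: the reset gate controls how much of h_prev is visible.
    reset_h = [rj * hj for rj, hj in zip(r, h_prev)]
    n = [math.tanh(a + b + c) for a, b, c in zip(matvec(Wn, x), matvec(Un, reset_h), bn)]
    # Interpolate between the old state and the candidate; there is no separate cell state.
    return [(1.0 - zj) * nj + zj * hj for zj, nj, hj in zip(z, n, h_prev)]


def gated_param_count(input_size, hidden_size, num_blocks):
    """Each block has W (hidden x input), U (hidden x hidden) and one bias (hidden)."""
    return num_blocks * (hidden_size * input_size + hidden_size * hidden_size + hidden_size)


# With all-zero weights every gate outputs 0.5 and the candidate is 0,
# so the new state is exactly half of the previous one.
zero = {k: ([[0.0] * 3 for _ in range(4)], [[0.0] * 4 for _ in range(4)], [0.0] * 4) for k in "rzn"}
print(gru_step([1.0, 2.0, 3.0], [1.0] * 4, zero))  # [0.5, 0.5, 0.5, 0.5]

# LSTM: 4 blocks (i, f, o, g); GRU: 3 blocks (r, z, n).
print(gated_param_count(10, 20, 4), gated_param_count(10, 20, 3))  # 2480 1860
```

With the same input and hidden sizes, the GRU in this sketch has three quarters of the LSTM's parameters.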

5. Challenges and Limitations of Recurrent Neural Networks:

Despite their widespread success, RNNs have several challenges and limitations, including:

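Difficulty modelling long-range dependencies, because gradients propagated back through many time steps tend to vanish (or, less often, explode)

High computational cost for training large-scale and deep RNNs, partly because each time step depends on the previous hidden state, so the steps of a sequence must be processed one after another rather than in parallel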
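The vanishing-gradient problem can be seen with a toy scalar RNN h_t = tanh(w_h h_{t-1} + w_x x_t). By the chain rule, the gradient of h_T with respect to h_0 is a product of T factors w_h (1 - h_t^2). The sketch below, with arbitrary made-up values for w_h and h_0, multiplies those factors out: when each factor is below 1 the product shrinks geometrically with T, and when it stays above 1 it grows instead.

```python
import math


def grad_through_time(w_h, h0, steps, x=0.0, w_x=1.0):
    """d h_T / d h_0 for the scalar RNN h_t = tanh(w_h * h_{t-1} + w_x * x)."""
    h, grad = h0, 1.0
    for _ in range(steps):
        h = math.tanh(w_h * h + w_x * x)
        grad *= w_h * (1.0 - h * h)  # chain rule: d h_t / d h_{t-1}
    return grad


# Vanishing: every factor is at most 0.9, so the gradient is bounded by 0.9**steps.
for steps in (1, 10, 50, 100):
    print(steps, grad_through_time(w_h=0.9, h0=0.5, steps=steps))

# Exploding: with h stuck at 0, every factor equals 1.5, so the gradient grows like 1.5**steps.
print(grad_through_time(w_h=1.5, h0=0.0, steps=50))
```

In a real network the factors are Jacobian matrices rather than scalars, but the same repeated multiplication is what makes distant time steps contribute almost nothing (or far too much) to the gradient.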

Conclusion

Recurrent Neural Networks have paved the way for significant advancements in the field of deep learning, specifically in handling sequential data. Their ability to retain information from previous time steps makes them well-suited for tasks such as language modelling, machine translation, and speech recognition.

Throughout this blog post, we have explored the structure and functioning of RNNs, including their forward propagation and training processes. We have also highlighted some of the applications where RNNs have demonstrated remarkable performance. In the next post, we will cover the steps we can take to tackle the limitations of RNNs, so stay tuned.

Thanks :)